Deploy a Deep Learning Model to a REST API

Deploy and scale DL models with SageMaker, Lambda, and API Gateway

Dec 9, 2021 by Yan Ding

1. Introduction

Deep learning models are usually large, which makes deploying and scaling them expensive. The cloud is a good option for deploying DL models. It is more flexible: at the very least, you do not need to buy servers and GPUs or maintain that hardware. It also costs less, especially when you just want to try something out.

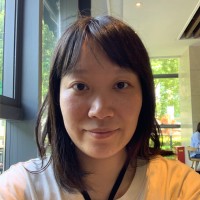

AWS is one of the best-known cloud platforms, and many people host their apps on it. Hugging Face provides APIs for deploying deep learning models on AWS; you can find the docs on their website. But once we have an endpoint, how do we send requests to it and get responses back? A SageMaker endpoint is not public, so we need a REST API in front of it, which we will build with Lambda and API Gateway.

Let's take a look at the whole process to get the REST API.

2. SageMaker

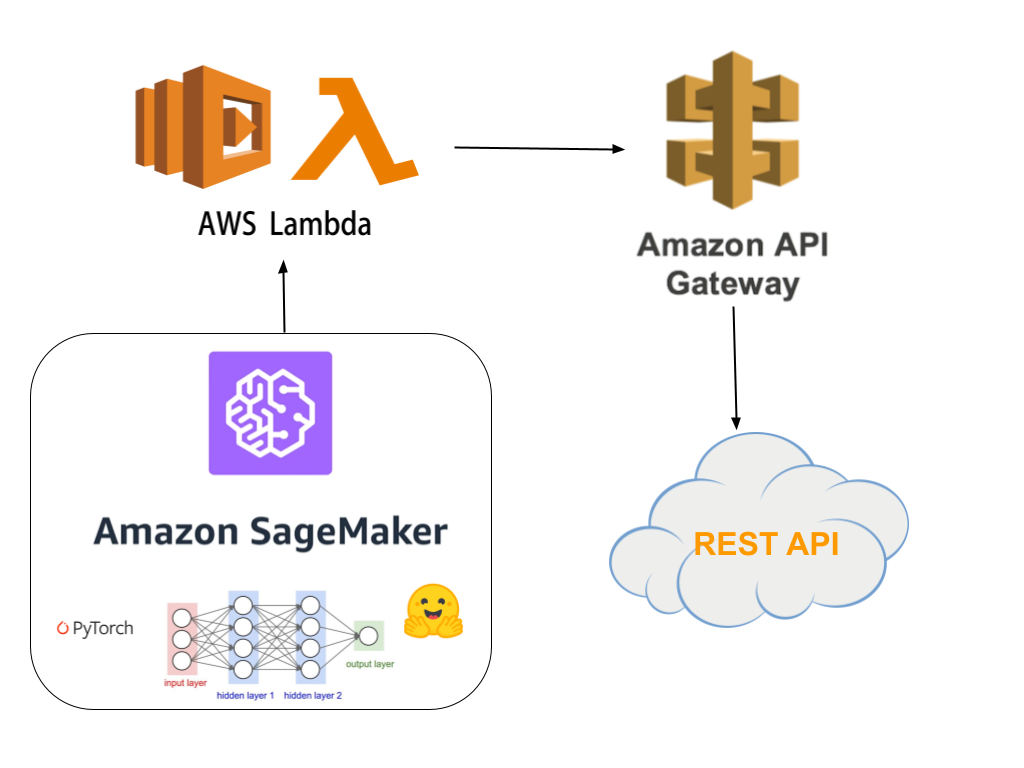

First, let's look at SageMaker. Suppose we want to deploy a Hugging Face model that translates English to German to SageMaker.

We follow Hugging Face's docs:

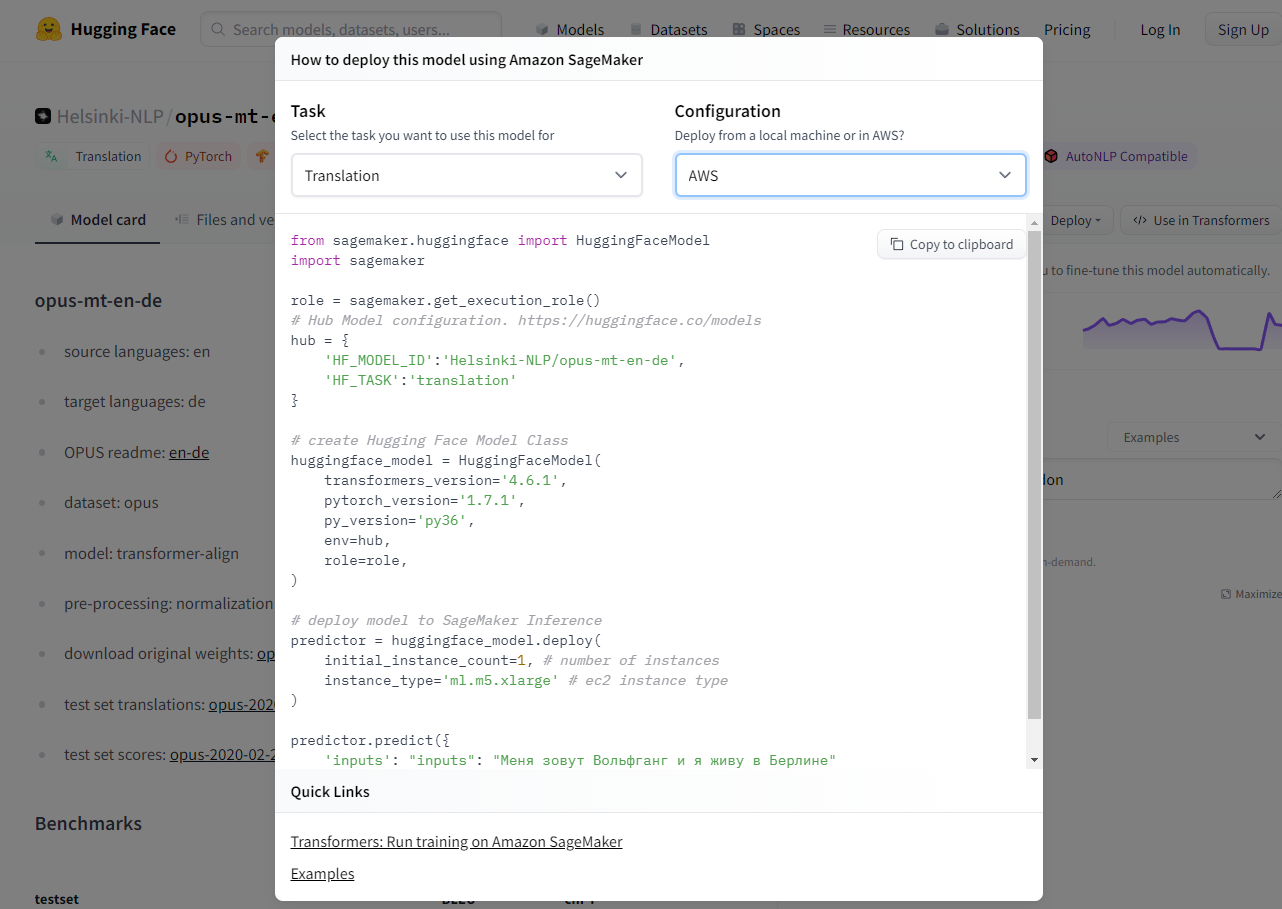

We open SageMaker in the AWS Management Console, choose Notebook, and create a notebook instance as follows.

We can change the instance type to ml.m5.xlarge to meet the demands of the translation model. Be aware that you are charged for as long as the instance is running, so don't forget to stop it when you are not using it.

After starting the instance, we paste the code from Hugging Face's docs into the notebook:

```python
from sagemaker.huggingface import HuggingFaceModel
import sagemaker

role = sagemaker.get_execution_role()

# Hub Model configuration. https://huggingface.co/models
hub = {
    'HF_MODEL_ID': 'Helsinki-NLP/opus-mt-en-de',
    'HF_TASK': 'translation'
}

# create Hugging Face Model Class
huggingface_model = HuggingFaceModel(
    transformers_version='4.6.1',
    pytorch_version='1.7.1',
    py_version='py36',
    env=hub,
    role=role,
)

# deploy model to SageMaker Inference
predictor = huggingface_model.deploy(
    initial_instance_count=1,     # number of instances
    instance_type='ml.m5.xlarge'  # ec2 instance type
)

# the model translates English to German, so the input is English
predictor.predict({
    'inputs': "My name is Wolfgang and I live in Berlin"
})
```

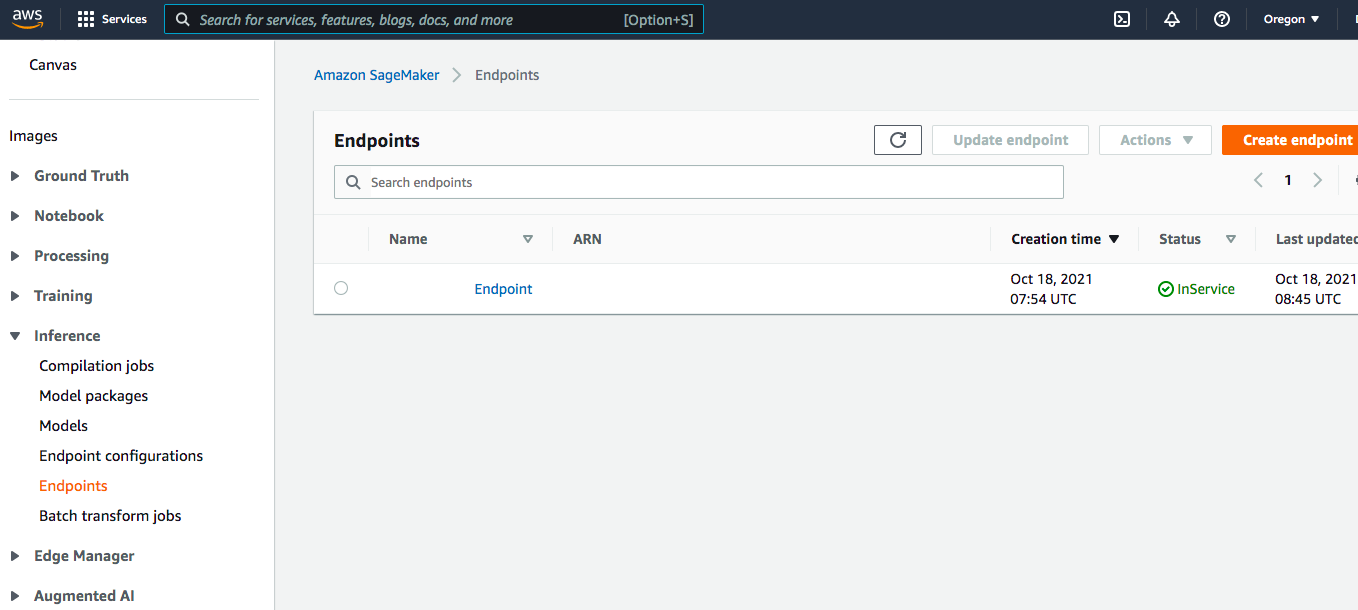

The huggingface_model.deploy() method creates a real-time endpoint in SageMaker, as shown in the screenshot.

Now we have an endpoint in SageMaker, but it is not publicly accessible: calls to it must be signed with AWS credentials. We will therefore invoke it from Lambda.
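Whichever client ends up calling the endpoint has to speak the same JSON contract. The helpers below are a minimal sketch of that contract, assuming the endpoint accepts {"inputs": "..."} (the shape passed to predictor.predict() above) and that the translation task answers with a list like [{"translation_text": "..."}]. The names make_request_body and parse_translation are hypothetical.

```python
import json


def make_request_body(text: str) -> bytes:
    """Encode one sentence as the JSON body the endpoint expects.

    Assumption: the Hugging Face endpoint accepts {"inputs": "..."}.
    """
    return json.dumps({"inputs": text}).encode("utf-8")


def parse_translation(body: bytes) -> str:
    """Pull the translated text out of the endpoint's JSON response.

    Assumption: the translation task returns [{"translation_text": "..."}],
    so we take the first element of the list.
    """
    result = json.loads(body.decode("utf-8"))
    return result[0]["translation_text"]
```

For example, make_request_body("Hello") yields b'{"inputs": "Hello"}', which is the byte string a Lambda function would send as the request body.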

3. Lambda

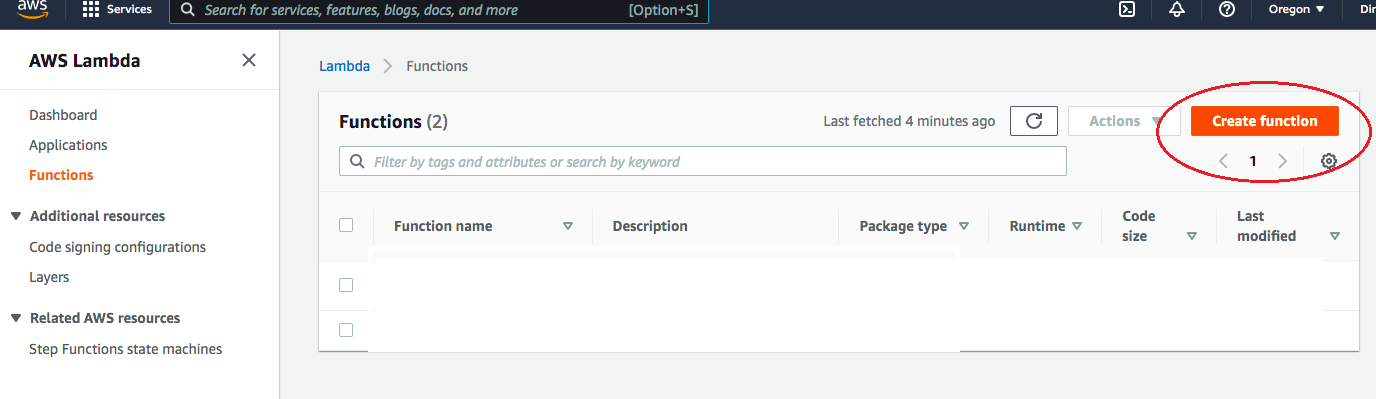

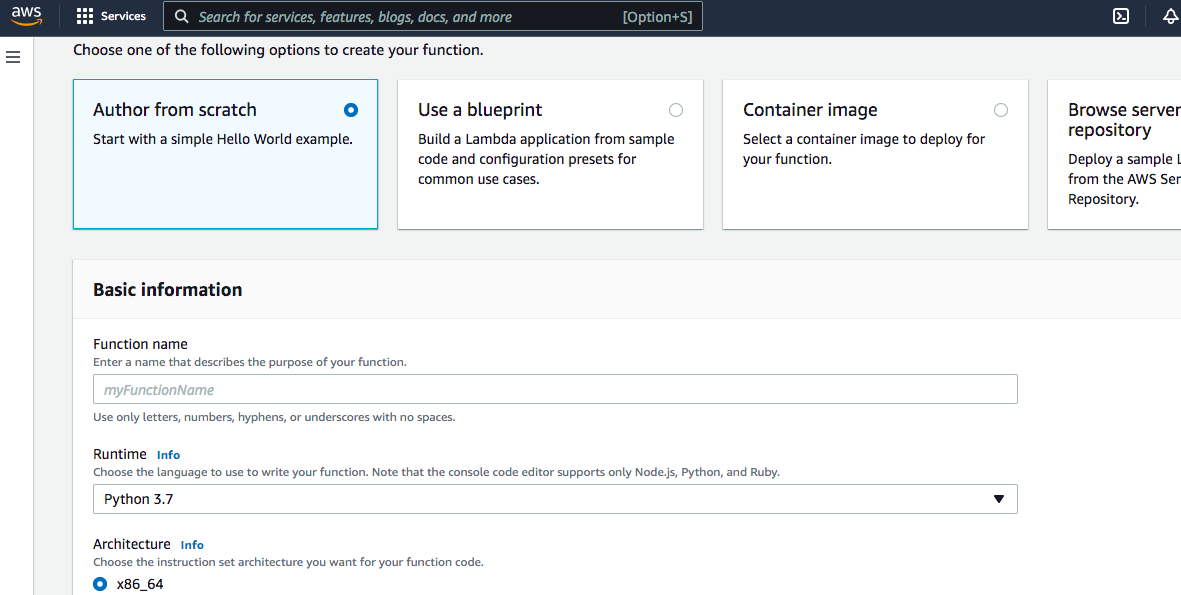

Open Lambda in the AWS Management Console and create a new function. For the runtime, we choose Python 3.7.

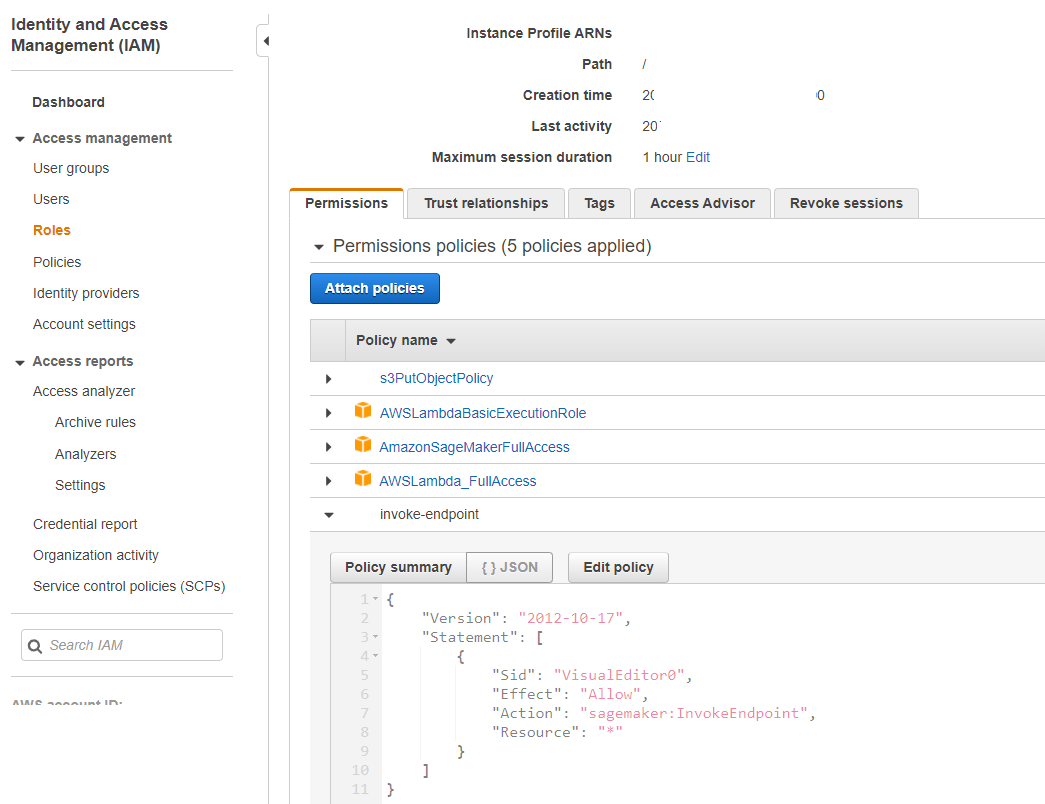

We also need to configure the execution role. First, create a new role in IAM for Lambda and attach a policy that allows invoking the endpoint ('sagemaker:InvokeEndpoint'), as below. For a more detailed tutorial, see this blog post: How to Create New Roles in AWS IAM
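If you prefer to write the permission yourself rather than pick it in the console, the policy is a small JSON document. Below is a minimal sketch that builds one in Python, scoped to a single endpoint. The region, account ID, and endpoint name are hypothetical placeholders, and the endpoint ARN format is an assumption you should check against the ARN shown in the SageMaker console. The role will usually also need the AWS managed policy AWSLambdaBasicExecutionRole so the function can write logs to CloudWatch.

```python
import json


def invoke_endpoint_policy(region: str, account_id: str, endpoint_name: str) -> dict:
    """Build an IAM policy document allowing InvokeEndpoint on one endpoint.

    Assumed ARN format: arn:aws:sagemaker:<region>:<account>:endpoint/<name>.
    """
    endpoint_arn = f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            }
        ],
    }


# Hypothetical values: replace with your own region, account ID and endpoint name.
policy = invoke_endpoint_policy("us-east-1", "123456789012", "my-translation-endpoint")
print(json.dumps(policy, indent=2))  # JSON you could paste into the IAM policy editor
```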

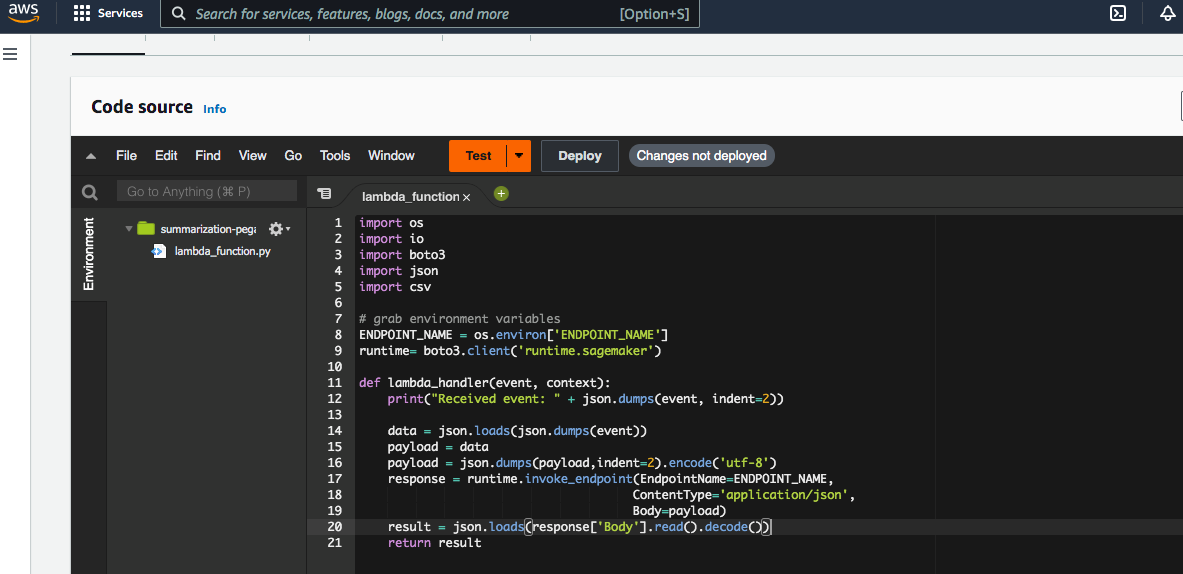

Expand 'Change default execution role', select the new role, and click Create function. Then edit the code source as follows. Add an environment variable named 'ENDPOINT_NAME' whose value is the name of the SageMaker endpoint we just created.
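The function code itself only appears in the screenshot. Below is a minimal sketch of what such a handler could look like, assuming the test event has the form {"inputs": "..."}. It reads ENDPOINT_NAME from the environment and calls the endpoint through boto3's sagemaker-runtime client (invoke_endpoint), which ships with the Lambda Python runtime. The optional runtime argument is a hypothetical hook for passing a stub client in local tests; Lambda itself calls lambda_handler(event, context) and leaves it unset.

```python
import json
import os

_runtime = None  # cached client, reused across warm invocations


def _get_runtime():
    """Create the sagemaker-runtime client once per container."""
    global _runtime
    if _runtime is None:
        import boto3  # bundled with the Lambda Python runtime

        _runtime = boto3.client("sagemaker-runtime")
    return _runtime


def lambda_handler(event, context, runtime=None):
    """Forward event["inputs"] to the SageMaker endpoint and return its JSON result."""
    if runtime is None:
        runtime = _get_runtime()

    payload = json.dumps({"inputs": event["inputs"]})
    response = runtime.invoke_endpoint(
        EndpointName=os.environ["ENDPOINT_NAME"],  # set in the Lambda configuration
        ContentType="application/json",
        Body=payload,
    )
    # The response body is a stream: read it, decode it, and parse the JSON.
    return json.loads(response["Body"].read().decode("utf-8"))
```

Creating the client lazily at module level keeps it out of the per-request path while still letting you test the handler without AWS credentials.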

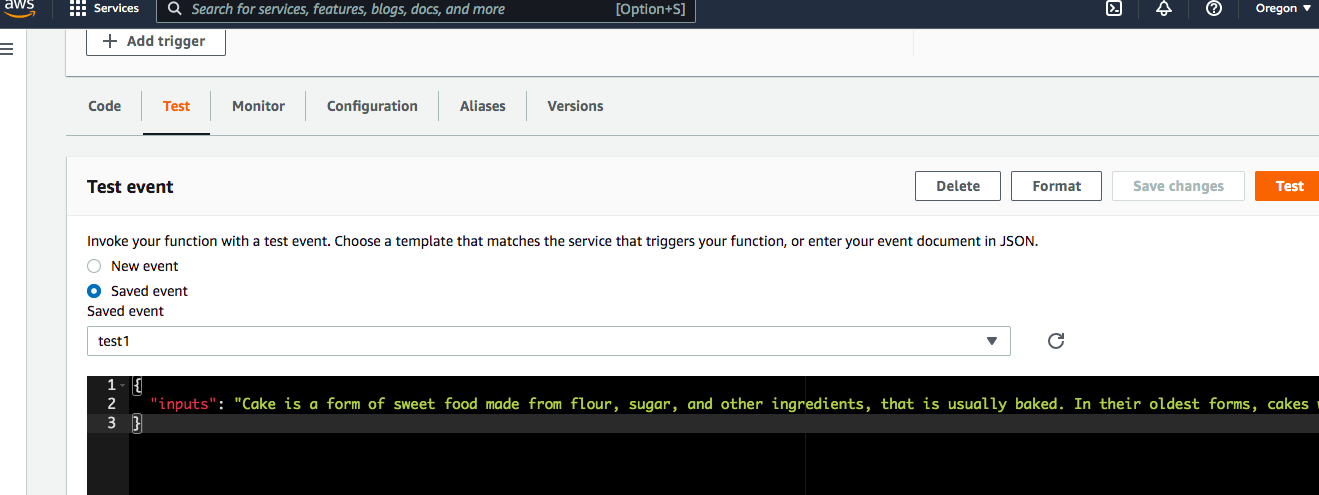

You can test the function first to check that it works: edit the test event, save it, and run the test.
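Besides the console's Test button, you can invoke the function from your own machine with boto3's Lambda client. The sketch below assumes the function reads event["inputs"]. The function name is hypothetical (use whatever you named yours), and lambda_client is an optional hook for passing a stub in tests.

```python
import json


def invoke_translate_function(text, function_name, lambda_client=None):
    """Invoke the deployed Lambda function synchronously and return its result."""
    if lambda_client is None:
        import boto3

        lambda_client = boto3.client("lambda")

    event = {"inputs": text}  # assumed test-event shape
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the result
        Payload=json.dumps(event).encode("utf-8"),
    )
    payload = json.loads(response["Payload"].read())
    # Lambda reports handler exceptions via the FunctionError key.
    if "FunctionError" in response:
        raise RuntimeError(f"Lambda function failed: {payload}")
    return payload
```

Usage, with a hypothetical function name: invoke_translate_function("My name is Wolfgang and I live in Berlin", "translate-en-de").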

It should return the same result as in SageMaker, but now the call goes through Lambda. If you need other packages, you can add them to Lambda as layers.

At this point we still do not have a REST API, because we cannot send requests to the function from a client such as Postman. For that, we need API Gateway.

4. API Gateway

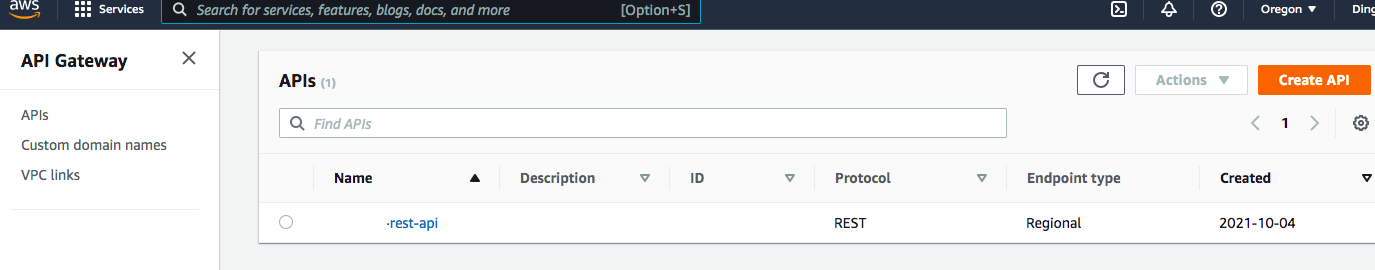

Open API Gateway in the AWS console, click Create API, and choose REST API.

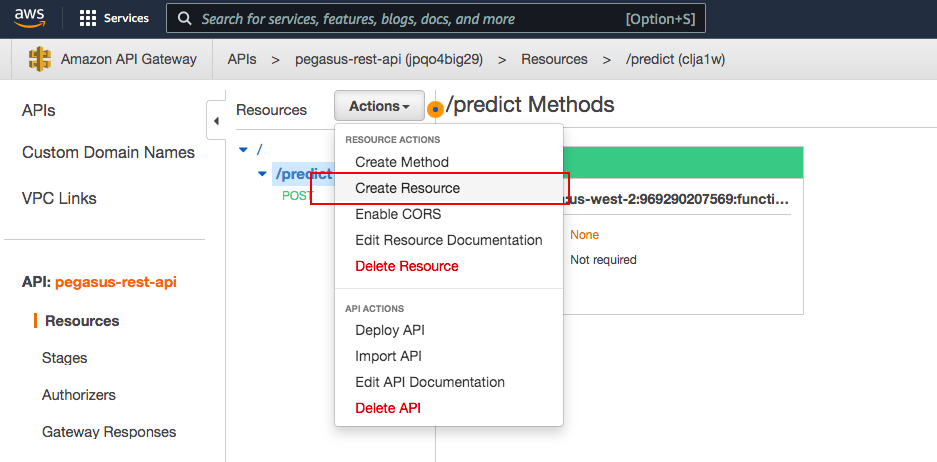

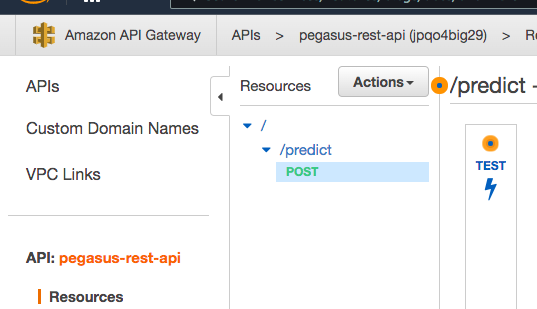

From the Actions menu, click Create Resource and name it predict.

On this resource, add a new POST method.

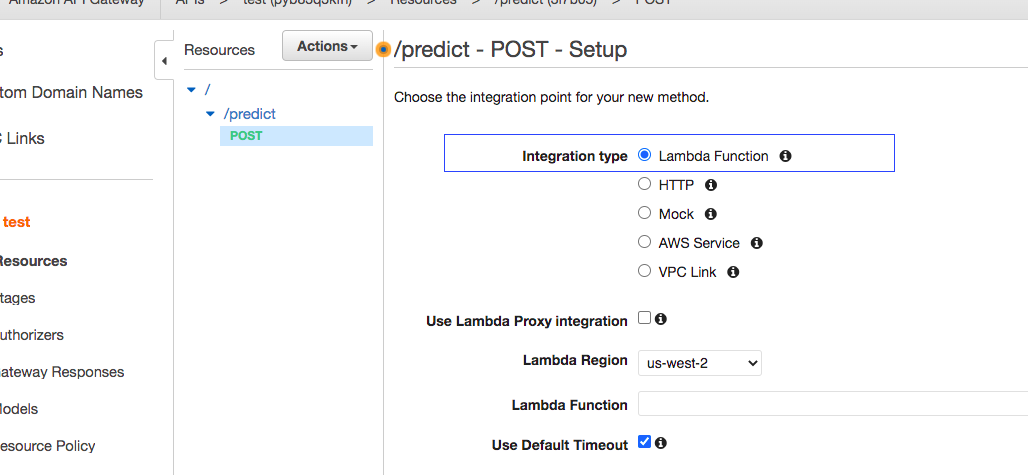

For the integration type, choose Lambda Function and select the function we just created.
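What the function receives depends on the integration settings. With the default (non-proxy) Lambda integration and no mapping template, API Gateway passes the JSON request body through as the event, so a handler can read event["inputs"] directly. If you enable Lambda proxy integration instead, the request body arrives as a string in event["body"], and the function must return a dict with statusCode, headers, and a string body. The sketch below accepts both shapes, assuming the request body is {"inputs": "..."}; the helper names extract_inputs and proxy_response are hypothetical.

```python
import base64
import json


def extract_inputs(event: dict) -> str:
    """Return the text to translate from either integration style."""
    if "body" in event:  # Lambda proxy integration: body is a raw string
        body = event["body"] or "{}"
        if event.get("isBase64Encoded"):
            body = base64.b64decode(body).decode("utf-8")
        return json.loads(body)["inputs"]
    return event["inputs"]  # non-proxy: the JSON body is the event itself


def proxy_response(status_code: int, payload) -> dict:
    """Shape a result the way Lambda proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),  # must be a string, not a dict
    }
```

With the non-proxy integration used in this walkthrough, the function's return value is sent back to the client as the response body, so proxy_response is only needed if you switch to proxy mode.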

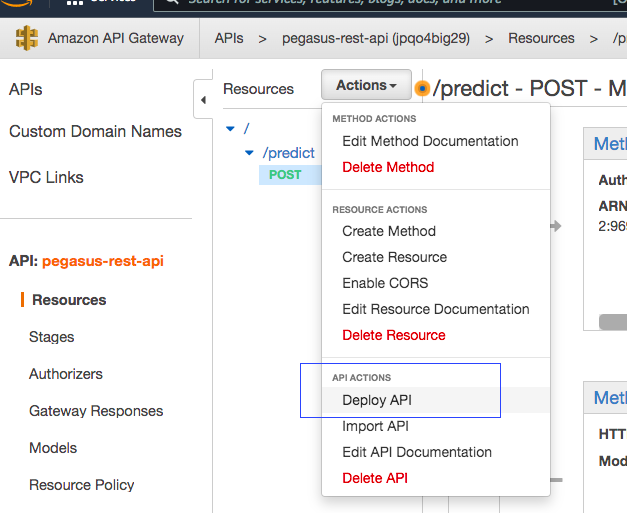

You can test the method in the console and then deploy the API to a stage.

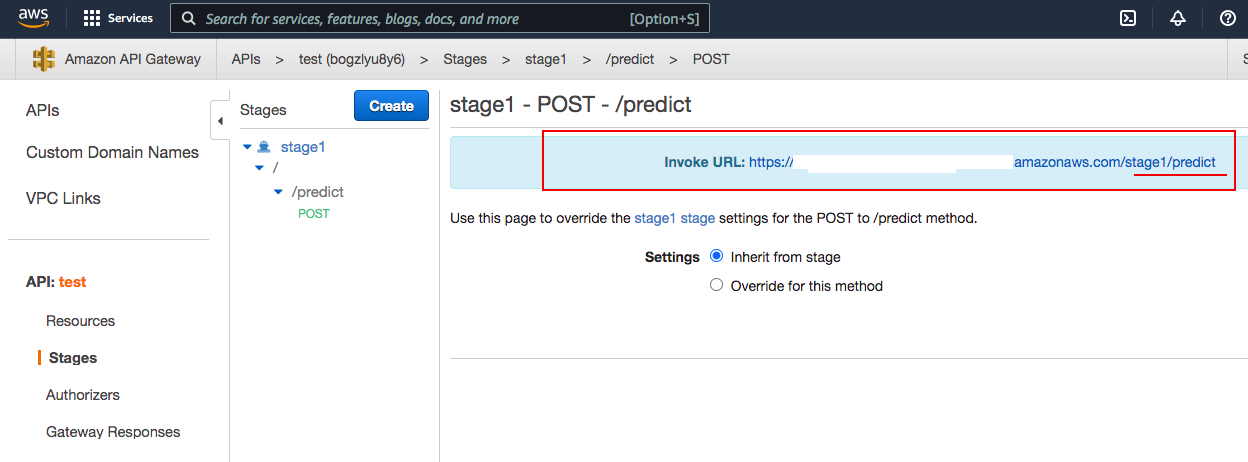

After deploying, we get the invoke URL, as shown in the screenshot.

5. Test the REST API

We can test the URL with Postman, or install the “Thunder Client” extension in Visual Studio Code. Send a POST request to the invoke URL with /predict appended and a JSON body; you should get the response from API Gateway.
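The same request can also be sent from a script. Below is a minimal sketch using only the Python standard library, assuming the request body is {"inputs": "..."}. The invoke URL (API ID, region, and stage name "prod") is a hypothetical placeholder; copy yours from the API Gateway stage page.

```python
import json
import urllib.request

# Hypothetical invoke URL: https://<api-id>.execute-api.<region>.amazonaws.com/<stage>
INVOKE_URL = "https://abc123.execute-api.us-east-1.amazonaws.com/prod"


def build_predict_request(invoke_url: str, text: str) -> urllib.request.Request:
    """Build a POST request to the /predict resource with a JSON body."""
    return urllib.request.Request(
        url=invoke_url.rstrip("/") + "/predict",
        data=json.dumps({"inputs": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(invoke_url: str, text: str, timeout: float = 30.0):
    """Send the request and decode the JSON response."""
    request = build_predict_request(invoke_url, text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Usage: predict(INVOKE_URL, "My name is Wolfgang and I live in Berlin") should return whatever the Lambda function returns.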

6. Summary

To sum up, we walked through deploying a deep learning model to AWS with SageMaker, Lambda, and API Gateway. Keep in mind that AWS permissions are fine-grained, so don't forget to create the roles you need in IAM. If you have any questions, please leave a comment below. Thank you for reading!